One of the Best Tips About What Is Cfg in AI

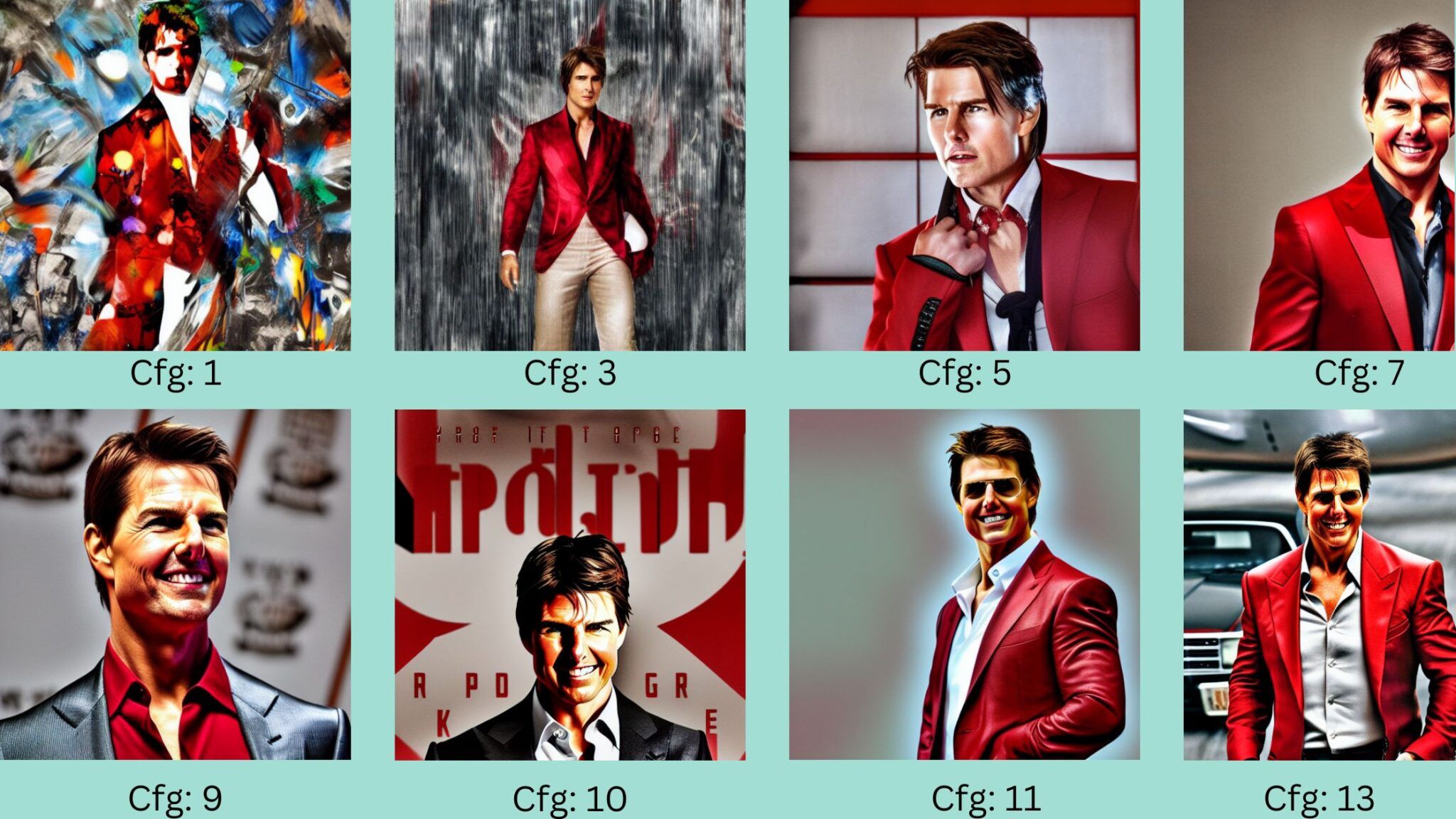

This setting determines how faithfully stable diffusion adheres to your.

Understanding Configuration (Cfg) in Artificial Intelligence: A Deep Dive

The Core of AI Parameterization

In Artificial Intelligence, the term "Cfg," short for configuration, refers to the parameters and settings that define a model's architecture and learning process. (In image generators such as Stable Diffusion, "CFG" usually names one specific setting, the classifier-free guidance scale; this article uses the broader sense.) It is not mere technical jargon; it dictates how an AI model behaves and performs. Think of it as the recipe for a complex dish: you need the right ingredients in the right proportions to achieve the desired flavor. Without a well-defined configuration, even sophisticated algorithms can fail to train or to produce meaningful results. So, when we discuss "Cfg" in AI, we're essentially talking about the blueprint that guides the model from raw data to useful output.

Specifically, a configuration file or settings structure within an AI system contains variables that control how the model is built and trained. These can range from learning rates and batch sizes in neural networks to the number of trees in a random forest. Each parameter has a specific impact, and tweaking them can dramatically alter the model's performance. It's a delicate balancing act, requiring a blend of theoretical understanding and practical experimentation. Imagine tuning a musical instrument: you need to know which strings to adjust and by how much to produce the perfect harmony. In AI, this "harmony" is the optimal performance of the model.
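As a minimal sketch of such a settings structure, the Python dataclass below groups the three settings named above. The class name `ModelConfig`, the field names, and the default values are assumptions chosen for illustration, not part of any particular library.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical settings container; field names and defaults are illustrative."""
    learning_rate: float = 0.01   # step size for a neural network optimizer
    batch_size: int = 32          # examples per gradient update
    n_trees: int = 100            # number of trees in a random forest

    def __post_init__(self):
        # Reject values that could never be valid before any training starts.
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")
        if self.batch_size < 1 or self.n_trees < 1:
            raise ValueError("batch_size and n_trees must be at least 1")


default_cfg = ModelConfig()
tuned_cfg = ModelConfig(learning_rate=0.001, batch_size=64)
print(asdict(tuned_cfg))
```

Freezing the dataclass means a configuration cannot be changed by accident halfway through a run; a new experiment gets a new object.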

The importance of configuration becomes even more apparent when dealing with complex deep learning models. These models often have millions of learned parameters (weights), which training sets automatically, but they also expose many hyperparameters, such as learning rate, batch size, and layer sizes, that must be chosen before training starts. Trying every combination of those by hand would be a Herculean task. That's where automated configuration management and hyperparameter tuning come into play. Tools and techniques have been developed to efficiently search the hyperparameter space and find a good combination of settings. It's a bit like having a robot chef who can automatically adjust the recipe until the dish is right. It saves time and gives more consistent results, allowing AI researchers to focus on higher-level tasks.

Moreover, the concept of "Cfg" extends beyond just numerical parameters. It also encompasses architectural choices, such as the number of layers in a neural network or the type of activation functions used. These choices are often made based on the specific problem being addressed and the characteristics of the data. A well-designed configuration is crucial for achieving state-of-the-art performance and ensuring that the AI model is both effective and efficient. It’s not just about making it work, but making it work well.
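To illustrate how architectural choices can live in a configuration, here is a small sketch for a fully connected network. `ArchConfig`, `layer_shapes`, and `count_parameters` are hypothetical names, and the layer sizes are arbitrary examples; the point is only that changing the config changes the model's size.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ArchConfig:
    """Hypothetical architecture settings for a fully connected network."""
    input_dim: int
    hidden_dims: List[int]
    output_dim: int
    activation: str = "relu"   # recorded here; a real builder would look it up


def layer_shapes(cfg):
    """Return (fan_in, fan_out) for each dense layer implied by the config."""
    dims = [cfg.input_dim, *cfg.hidden_dims, cfg.output_dim]
    return list(zip(dims[:-1], dims[1:]))


def count_parameters(cfg):
    """Count weights plus biases across every dense layer."""
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in layer_shapes(cfg))


small = ArchConfig(input_dim=784, hidden_dims=[128], output_dim=10)
deep = ArchConfig(input_dim=784, hidden_dims=[256, 128, 64], output_dim=10)

print(layer_shapes(small))
print(count_parameters(small), count_parameters(deep))
```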

The Practical Applications of Cfg in AI Development

From Data Pipelines to Model Deployment

The use of configuration files is ubiquitous in AI development, spanning from data preprocessing to model deployment. In the data pipeline, configurations might define how data is cleaned, transformed, and split into training and testing sets. This ensures consistency and reproducibility, which are crucial for reliable AI systems. Think of it as setting up a standardized assembly line in a factory. Each step is precisely defined to ensure the final product meets quality standards. In AI, this "product" is a well-prepared dataset.
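A rough sketch of a config-driven data step follows. The `SplitConfig` fields and the `prepare` function are assumptions made for this example; the cleaning rule (dropping `None` rows) stands in for whatever cleaning a real pipeline needs.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SplitConfig:
    """Hypothetical pipeline settings; names are illustrative."""
    test_fraction: float = 0.2   # share of cleaned rows held out for testing
    seed: int = 42               # fixes the shuffle so the split is repeatable
    drop_missing: bool = True    # cleaning rule: discard rows that are None


def prepare(rows, cfg):
    """Clean, shuffle, and split rows into (train, test) as the config dictates."""
    cleaned = [r for r in rows if r is not None] if cfg.drop_missing else list(rows)
    rng = random.Random(cfg.seed)   # local generator, independent of global state
    rng.shuffle(cleaned)
    n_test = int(len(cleaned) * cfg.test_fraction)
    return cleaned[n_test:], cleaned[:n_test]


raw = [1, 2, None, 3, 4, 5, None, 6, 7, 8, 9, 10]
train, test = prepare(raw, SplitConfig())
print(len(train), len(test))
```

Because the seed is part of the configuration, running the same config again yields the same split, which is what makes the step reproducible.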

During model training, configurations dictate the learning process, influencing how the model learns from the data. Parameters such as learning rate, batch size, and regularization strength are all defined in the configuration. These settings directly affect the model's ability to generalize and avoid overfitting. It’s like teaching a student—you need to adjust your teaching methods and pace based on their learning style and progress. A well-tuned learning process can significantly improve the model's performance.
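The effect of these three settings can be seen in a toy example. The sketch below fits a one-parameter model `y = w * x` with mini-batch gradient descent; `TrainConfig`, `fit_slope`, and all the numeric values are assumptions for illustration, and a real project would use a deep learning framework instead.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical training settings; values are illustrative."""
    learning_rate: float = 0.05
    batch_size: int = 4
    l2_strength: float = 0.0   # regularization strength
    epochs: int = 200
    seed: int = 0


def fit_slope(xs, ys, cfg):
    """Fit y = w * x by mini-batch gradient descent with an L2 penalty on w."""
    rng = random.Random(cfg.seed)
    data = list(zip(xs, ys))
    w = 0.0
    for _ in range(cfg.epochs):
        rng.shuffle(data)
        for start in range(0, len(data), cfg.batch_size):
            batch = data[start:start + cfg.batch_size]
            # Gradient of the batch mean squared error with respect to w.
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            # Gradient of the penalty l2_strength * w**2.
            grad += 2 * cfg.l2_strength * w
            w -= cfg.learning_rate * grad
    return w


xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
ys = [3.0 * x for x in xs]   # noiseless data with true slope 3

w_plain = fit_slope(xs, ys, TrainConfig())
w_regularized = fit_slope(xs, ys, TrainConfig(l2_strength=0.5))
print(w_plain, w_regularized)
```

With no penalty the fitted slope approaches the true value of 3, while the L2 penalty pulls it toward zero. Nothing changed except the configuration.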

In model deployment, configurations are used to specify how the model is integrated into a production environment. This includes settings for scaling, resource allocation, and error handling. A robust configuration ensures that the model can handle real-world workloads and provide reliable predictions. It’s like ensuring a car is properly maintained and tuned before a long road trip. You want it to perform smoothly and reliably. AI deployment is no different.
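As a hedged sketch of what scaling and error-handling settings might look like, the example below clamps a replica count to configured bounds and retries a failing call a configured number of times. `DeployConfig`, its fields, and both helper functions are hypothetical; real platforms expose their own settings for this.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DeployConfig:
    """Hypothetical deployment settings; names and values are illustrative."""
    min_replicas: int = 1
    max_replicas: int = 8
    requests_per_replica: int = 50   # assumed capacity of one model server
    max_retries: int = 3             # extra attempts after the first failure


def replicas_for(load_rps, cfg):
    """Scale to the load, but never outside the configured bounds."""
    wanted = math.ceil(load_rps / cfg.requests_per_replica)
    return max(cfg.min_replicas, min(cfg.max_replicas, wanted))


def call_with_retries(fn, cfg):
    """Call fn, retrying on RuntimeError up to cfg.max_retries extra times."""
    last_error = None
    for _ in range(cfg.max_retries + 1):
        try:
            return fn()
        except RuntimeError as exc:
            last_error = exc
    raise last_error


cfg = DeployConfig()
print(replicas_for(0, cfg), replicas_for(120, cfg), replicas_for(10_000, cfg))
```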

Furthermore, plain-text configuration formats such as YAML and JSON are widely used to store and manage these settings. Because the files are plain text, they work well with version control and make collaboration easier, which is essential for large-scale AI projects. It's like having a well-organized cookbook where you can easily find and modify recipes. This streamlines the development process and keeps everyone on the same page. Effective configuration management is key to building scalable and maintainable AI systems.
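The sketch below shows one way to load a JSON configuration and apply per-experiment overrides. JSON is used because Python's standard library parses it; YAML would need a third-party parser. The section names, keys, and the `with_overrides` helper are assumptions for this example.

```python
import copy
import json

# In a real project this text would live in a version-controlled file.
CONFIG_TEXT = """
{
  "data": {"test_fraction": 0.2, "seed": 42},
  "training": {"learning_rate": 0.001, "batch_size": 64},
  "deployment": {"replicas": 2, "timeout_seconds": 30}
}
"""


def with_overrides(base, overrides):
    """Return a copy of base with nested override values applied."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = with_overrides(merged[key], value)
        else:
            merged[key] = value
    return merged


base = json.loads(CONFIG_TEXT)
experiment = with_overrides(base, {"training": {"learning_rate": 0.01}})

# sort_keys gives a stable layout, which keeps version-control diffs small.
print(json.dumps(experiment, indent=2, sort_keys=True))
```

Keeping a shared base file and a small set of overrides per experiment makes it easy to see exactly what differs between two runs.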

Hyperparameter Tuning: The Art of Cfg Optimization

Finding the Sweet Spot

Hyperparameter tuning is a critical aspect of AI development, focusing on finding the optimal configuration for a model. This process involves systematically exploring the parameter space and evaluating the model's performance on a validation set. Techniques such as grid search, random search, and Bayesian optimization are commonly used. It's a bit like searching for the perfect blend of ingredients for a new recipe. You need to try different combinations and see which one produces the best results.

Grid search tries every combination drawn from a predefined set of values for each hyperparameter. Its cost grows exponentially with the number of hyperparameters, so it becomes computationally expensive in high-dimensional spaces. Random search, on the other hand, samples configurations at random, which is often more efficient when only a few hyperparameters really matter. Bayesian optimization uses a probabilistic model of past results to guide the search, focusing on promising regions of the parameter space. It's like having a smart assistant who can suggest which combinations are most likely to work. Each method has its strengths and weaknesses, and the choice depends on the specific problem and available resources.
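Grid search and random search are simple enough to sketch in a few lines. In the example below, `validation_score` is a made-up stand-in for training a model and scoring it on a validation set, and the search ranges are arbitrary assumptions. Bayesian optimization is left out because it needs a surrogate model and is normally taken from a library.

```python
import itertools
import random


def validation_score(learning_rate, batch_size):
    """Made-up objective standing in for train-then-validate; best at (0.01, 64)."""
    return -((learning_rate - 0.01) * 100) ** 2 - ((batch_size - 64) / 64) ** 2


def grid_search(grid):
    """Evaluate every combination in the grid; return (best_score, best_params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(n_trials, seed=0):
    """Sample n_trials random configurations; return (best_score, best_params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [16, 64, 256]}
print("grid:  ", grid_search(grid))         # 3 x 3 = 9 evaluations
print("random:", random_search(n_trials=9))  # same budget, different points
```

Grid search only ever sees the values listed in the grid, while random search can land between them; which one does better depends on the budget and on how well the grid was chosen.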

Automated machine learning (AutoML) tools have made hyperparameter tuning more accessible, automating the search process and providing recommendations for optimal settings. These tools can significantly reduce the time and effort required to train high-performing models. It’s like having a self-driving car that can navigate the best route to your destination. AutoML tools are democratizing AI development, making it easier for non-experts to build effective models.

Hyperparameter tuning often decides whether a model is merely adequate or genuinely good. Even small changes in the configuration can have a significant impact on performance; a learning rate that is slightly too high, for instance, can keep training from converging. A well-tuned model gets the most out of the same data and architecture.

The Role of Cfg in AI Model Reproducibility

Ensuring Consistency and Reliability

Reproducibility is a cornerstone of scientific research, and AI is no exception. Configuration files play a crucial role in ensuring that AI models can be reproduced and validated by other researchers. By documenting the exact parameters and settings used to train a model, researchers can ensure that their results are transparent and reliable. It’s like providing a detailed lab notebook so that others can replicate your experiments. This fosters trust and accelerates scientific progress.
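One lightweight way to document the exact settings behind a result is to store a fingerprint of the configuration next to it. The sketch below is an assumption about how that could be done, not an established standard: `config_fingerprint` and `run_experiment` are hypothetical names, and the "metric" is simulated rather than produced by real training.

```python
import hashlib
import json
import random


def config_fingerprint(cfg):
    """Short, stable ID for a config: the same settings give the same ID."""
    canonical = json.dumps(cfg, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def run_experiment(cfg):
    """Stand-in for training: all randomness flows from the seed in the config."""
    rng = random.Random(cfg["seed"])
    simulated_metric = 0.9 + rng.uniform(-0.01, 0.01)
    return {"config_id": config_fingerprint(cfg), "metric": simulated_metric}


cfg = {"learning_rate": 0.001, "batch_size": 64, "seed": 7}
first = run_experiment(cfg)

# Same settings written in a different key order still reproduce the run.
reordered = dict(reversed(list(cfg.items())))
second = run_experiment(reordered)

print(first["config_id"], first == second)
```

Real training adds other sources of variation, such as library versions and hardware, so a recorded config and seed are necessary for reproducibility but may not be sufficient on their own.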

Version control systems, such as Git, are often used to manage configuration files, allowing researchers to track changes and revert to previous versions if needed. This ensures that the entire development process is auditable and traceable. It’s like having a time machine that allows you to go back and see exactly what was done at each step. This is crucial for debugging and understanding how the model evolved.

Containerization technologies, such as Docker, are also used to package AI models and their dependencies, including configuration files, into self-contained units. This lets the model run the same way on any host that provides a compatible container runtime, largely independent of the underlying infrastructure. It's like having a portable lab that can be set up anywhere. This simplifies deployment and helps the model behave consistently across different platforms.

Furthermore, standardized configuration formats, such as YAML and JSON, facilitate the exchange and sharing of AI models and their settings. This promotes collaboration and accelerates the adoption of best practices. It’s like having a common language that everyone can understand. This makes it easier to share and build upon each other’s work. Reproducibility is essential for building a robust and reliable AI ecosystem.

Future Trends and Developments in AI Configuration

The Evolution of Cfg Management

The field of AI is rapidly evolving, and configuration management is no exception. Future trends include the development of more sophisticated AutoML tools that can automatically optimize configurations for a wider range of AI models. These tools will leverage advanced techniques such as reinforcement learning and meta-learning to find optimal settings more efficiently. It’s like having an AI assistant that can learn from past experiences and make better recommendations. This will further democratize AI development and make it easier for non-experts to build high-performing models.

Another trend is the integration of configuration management with cloud-based AI platforms, allowing for seamless scaling and deployment of models. These platforms will provide tools for monitoring and managing configurations in real-time, ensuring that models can adapt to changing workloads and data distributions. It’s like having a control center that allows you to manage your AI fleet from a single dashboard. This will improve efficiency and reliability, making it easier to deploy and manage AI systems at scale.

The use of explainable AI (XAI) techniques to understand the impact of different configuration settings on model performance is also gaining traction. This will allow researchers to gain deeper insights into how models learn and make decisions, leading to the development of more robust and reliable AI systems. It’s like having a microscope that allows you to see the inner workings of the model. This will improve transparency and trust in AI.

Furthermore, the development of standardized configuration formats